Demystifying Technical SEO: 10 Tips for Success

Last updated on Saturday, November 11, 2023

Technical SEO is an essential part of any website's success. It involves optimizing your website for search engines, and it also includes practices meant to improve user experience.

Does it look too complicated? Worry not!

With the help of these ten tips, you can ensure that your website is optimized for search engine crawlers and provides a seamless experience for your audience.

#1. Ensuring Crawlability and Indexability

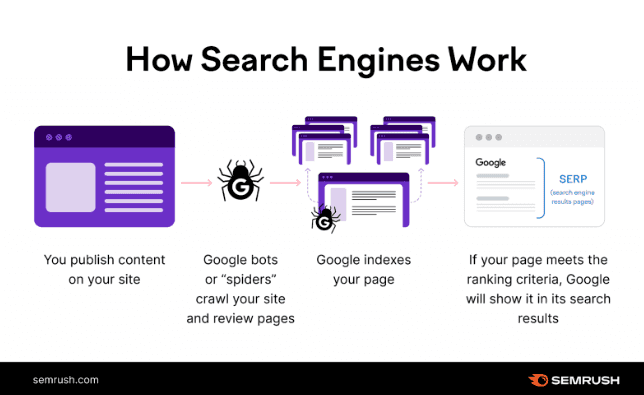

The crawl budget, crawlability, and indexability are important concepts to understand when optimizing a website for search engines.

These factors affect how easily and thoroughly search engines can access and store your website's information.

Source: Semrush

Crawl Budget

Crawl budget refers to the number of pages a search engine bot will crawl on your site within a given timeframe. It is determined by several factors, such as the size and quality of your website, how often it is updated, and the server's ability to handle crawl requests.

If you have a larger website with frequent updates, it is important to make sure that your server can handle the increased crawl rate. This can be done by optimizing your website's performance and making sure there are no technical errors that could slow down or block crawl requests.

Crawlability

Crawlability refers to how easily search engine bots can access and navigate your website. It is affected by factors such as site structure, internal linking, and the use of sitemaps. A well-structured website with clear navigation and internal linking will make it easier for search engine bots to crawl and index your pages.
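One way to make "clear navigation and internal linking" measurable is click depth: how many links a bot has to follow from the home page to reach a given page. The snippet below is a minimal Python sketch, assuming you already have a map of internal links from a crawl. The `SITE_LINKS` dictionary and its URLs are made up for illustration.

```python
from collections import deque

# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/technical-seo/"],
    "/services/": [],
    "/blog/technical-seo/": ["/"],
    "/old-landing-page/": ["/"],  # no other page links here
}


def click_depths(links, start="/"):
    """Breadth-first search: fewest clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, start="/"):
    """Pages in the map that cannot be reached by following links from `start`."""
    reachable = click_depths(links, start)
    return sorted(page for page in links if page not in reachable)


print(click_depths(SITE_LINKS))
print(orphan_pages(SITE_LINKS))  # ['/old-landing-page/']
```

Pages with a high click depth, or pages that show up as orphans, are the ones most likely to benefit from extra internal links.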

Indexability

Indexability refers to whether or not a search engine bot can store and retrieve your website's information in its database. If a page is not indexable, it will not show up in search results. The most common reasons for indexability issues include duplicate content, broken links, and technical errors.

To ensure indexability, regularly check for duplicate content and either remove it or use canonical tags to indicate the preferred version of a page. Broken links should also be fixed or redirected to avoid indexing errors. Regular site audits can help identify technical issues that may affect indexability.

Here are some tips to optimize the process:

Tip 1. Optimize robots.txt

Robots.txt is a file that tells search engine bots which pages they should or shouldn't crawl. It's important to optimize this file so that bots spend their time crawling the relevant pages on your website. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it.

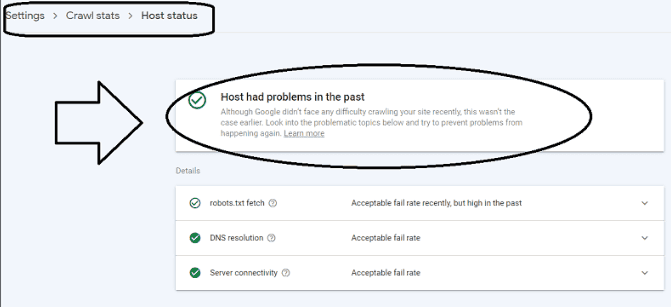
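Before publishing a robots.txt file, it can help to test it against a few URLs. The sketch below uses Python's standard `urllib.robotparser` module. The rules, paths, and domain in `SAMPLE_ROBOTS_TXT` are assumptions for the example, not a recommended configuration for every site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

for url in (
    "https://www.example.com/blog/technical-seo/",
    "https://www.example.com/admin/login",
):
    allowed = parser.can_fetch("Googlebot", url)
    print(url, "->", "crawlable" if allowed else "blocked")
```

Python's parser applies the first matching rule, while some search engines pick the most specific one. Listing the `Disallow` lines before the catch-all `Allow` keeps both interpretations in agreement here.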

Tip 2. Check for crawl errors

Regularly check for crawl errors in Google Search Console and fix them promptly. These errors can prevent your content from being indexed, so it's crucial to address them as soon as possible.

#2. Implementing XML Sitemaps

An XML sitemap is a file that lists the pages on your website that you want search engines to find, optionally with details such as when each page was last modified. It helps search engine bots discover your URLs and can improve crawl efficiency.

How to create an XML sitemap:

Use an online sitemap generator like XML-Sitemaps.com (or an SEO plugin like Yoast)

Upload the generated sitemap to your website's root folder

Submit the sitemap to Google Search Console and Bing Webmaster Tools

Tips for optimizing XML sitemaps:

Keep it updated: Ensure that your XML sitemap is regularly updated whenever new pages or content are added to your website.

Limit the number of URLs: A single XML sitemap should not contain more than 50,000 URLs or be larger than 50 MB uncompressed. Larger sites should split their URLs across several sitemap files.

Use the `<lastmod>` tag: This tag indicates when a page was last modified and can help search engines prioritize crawling.

Include only important pages: Your XML sitemap should only include pages that you want to be indexed by search engines.

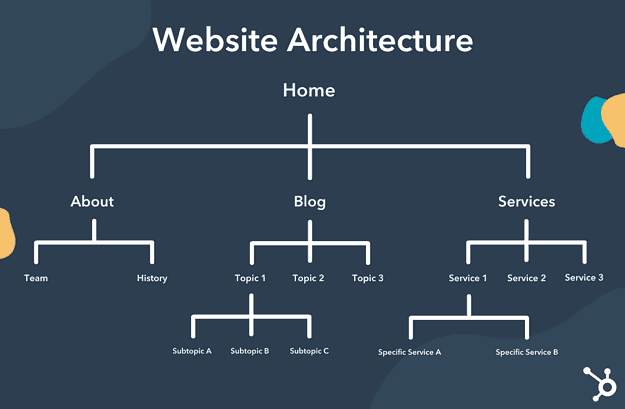
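If you would rather generate the file yourself than rely on an online tool, the structure is simple enough to script. Here is a minimal Python sketch using only the standard library. The example URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper name, not part of any SEO tool.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    pages = list(pages)
    if len(pages) > MAX_URLS_PER_SITEMAP:
        raise ValueError("Too many URLs: split them across several sitemap files")
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # <lastmod> uses the YYYY-MM-DD date format.
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", date(2023, 11, 11)),
    ("https://www.example.com/blog/technical-seo/", date(2023, 10, 2)),
])
print(sitemap_xml)
```

The output can be saved as `sitemap.xml` in the site's root folder and then submitted as described above.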

#3. Using SEO-Friendly URL Structures

Your website's URL structure plays a crucial role in both user experience and search engine optimization.

A clear and descriptive URL can help users understand what the page is about, while also providing important information to search engines.

Source: Hubspot

Here are some tips for creating SEO-friendly URLs:

Use relevant keywords: Include keywords related to the content on the page in the URL.

Keep it short and simple: A shorter URL is easier to read and remember for both users and search engines.

Use hyphens to separate words: Hyphens are preferred by search engines over underscores or other characters.

Avoid using numbers or special characters: These can make a URL look confusing and difficult to understand.
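These rules are easy to apply automatically when a page title is turned into a URL slug. The function below is a rough Python sketch. The name `slugify` and the six-word limit are assumptions chosen for the example, not a standard.

```python
import re
import unicodedata


def slugify(title, max_words=6):
    """Turn a page title into a short, lowercase, hyphen-separated slug."""
    # Strip accents so that "Café" becomes "Cafe".
    ascii_title = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Keep only runs of letters and digits; underscores, punctuation,
    # and other special characters act as separators and are dropped.
    words = re.findall(r"[a-z0-9]+", ascii_title.lower())
    return "-".join(words[:max_words])


print(slugify("Demystifying Technical SEO: Tips & Tricks"))
# demystifying-technical-seo-tips-tricks
print(slugify("Café Menu_Prices"))
# cafe-menu-prices
```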

#4. Optimizing Page Load Speed

Page load speed is an important factor in both user experience and search engine rankings.

A slow-loading website can negatively impact user engagement and lead to a higher bounce rate.

Here are some tips for improving it:

Optimize images: Compress images to reduce their file size without sacrificing quality.

Enable browser caching: This allows the browser to store files from your website, reducing load time for repeat visitors.

Minify code: Remove unnecessary characters and spaces from HTML, CSS, and JavaScript files to reduce their size.

Choose a reliable hosting provider: Make sure your website is hosted on a server with good performance and uptime.
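Browser caching is controlled by the `Cache-Control` response header. As a rough illustration, the Python sketch below picks a header value based on the file type. The policy itself is an assumption: it presumes static assets have versioned file names (so they can be cached for a long time) and that HTML should always be revalidated. The function name and extension list are hypothetical.

```python
from pathlib import PurePosixPath

ONE_YEAR_IN_SECONDS = 31_536_000

# Assumed policy: these asset types get versioned file names, so a long cache is safe.
LONG_LIVED_EXTENSIONS = {
    ".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2",
}


def cache_control_for(path):
    """Suggest a Cache-Control header value for a URL path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in LONG_LIVED_EXTENSIONS:
        return f"public, max-age={ONE_YEAR_IN_SECONDS}, immutable"
    # HTML and everything else: the browser may store it but must revalidate.
    return "no-cache"


print(cache_control_for("/assets/app.3f9a1c.js"))
print(cache_control_for("/blog/technical-seo/"))
```

How the header is actually set depends on your server or CDN, so treat this as a description of the policy rather than a drop-in configuration.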

#5. Utilizing Schema Markup

Structured data markup is code that helps search engines understand the content on your website. It provides additional context to search engine bots and can result in rich snippets in search results, which can improve click-through rates.

Here are some ways to implement it on your website:

Use schema markup: Schema.org provides a standardized way of adding structured data to web pages.

Include organization and contact information: Adding schema markup for your business's name, address, and phone number can improve local SEO.

Add product details: If you have an e-commerce website, adding structured data for products can help display important information like price, availability, and reviews in search results.
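Structured data is usually embedded as a JSON-LD script tag. The Python sketch below builds one for the organization and contact details mentioned above, using the Schema.org `Organization` and `PostalAddress` types. The business details are invented, and `organization_jsonld` is a hypothetical helper name.

```python
import json


def organization_jsonld(name, url, phone, street, city, postal_code, country):
    """Build a JSON-LD <script> tag describing a business."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
    }
    # Escape "</" so the JSON cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Made-up business details for illustration.
snippet = organization_jsonld(
    name="Example Co",
    url="https://www.example.com/",
    phone="+1-555-0100",
    street="1 Example Street",
    city="Springfield",
    postal_code="12345",
    country="US",
)
print(snippet)
```

The resulting tag goes in the page's HTML. It is worth running the page through a structured data testing tool before relying on it.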

#6. Implementing Secure HTTPS

HTTPS is the secure version of the HTTP protocol. It ensures that any data exchanged between a browser and a website is encrypted, providing an added layer of security for users.

In addition to improving website security, it can also have a positive impact on search engine rankings.

Here are some tips for implementing it on your website:

Obtain an SSL/TLS certificate: This is necessary to enable HTTPS on your website.

Redirect all HTTP URLs to their corresponding HTTPS version: This ensures that all traffic is encrypted and that there aren't any duplicate versions of your website indexed by search engines.
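In practice the redirect is configured on the web server or CDN, but the mapping itself is simple: same host, path, and query string, with the scheme switched to `https`. The Python sketch below shows that mapping. The function name is hypothetical, and dropping an explicit port 80 is an assumption about how the site is served.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect_target(url):
    """Return the HTTPS URL a request should be 301-redirected to, or None."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        return None  # already secure, nothing to do
    host = parts.hostname or ""
    # Assumption: an explicit :80 is the HTTP default and should not carry over.
    netloc = host if parts.port in (None, 80) else f"{host}:{parts.port}"
    return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))


print(https_redirect_target("http://Example.com:80/page?x=1"))
# https://example.com/page?x=1
print(https_redirect_target("https://example.com/"))
# None
```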

#7. Managing Duplicate Content

Duplicate content refers to identical or very similar content on different pages of your website. It can negatively impact search engine rankings as it creates confusion for search engines trying to determine the most relevant page.

Here are some tips for managing duplicate content:

Create unique and valuable content: This is pretty straightforward. Just write original, in-depth, quality content.

Use canonical tags: This tells search engines which version of a page is the primary one and should be indexed.

Implement redirects: If you have multiple versions of the same content, redirect them to a single version using 301 redirects.
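A common source of duplicates is the same page being reachable under several URLs that differ only in tracking parameters. The Python sketch below derives one canonical URL and the matching canonical tag. The list of parameters to strip is an assumption and will vary from site to site, and both function names are hypothetical.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameters; adjust to whatever your campaigns actually use.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"gclid", "fbclid"}


def canonical_url(url):
    """Drop tracking parameters and the fragment so URL variants collapse to one."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIXES)
        and key.lower() not in TRACKING_NAMES
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def canonical_tag(url):
    """Build the <link rel="canonical"> element for a page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_url("https://www.example.com/shoes?utm_source=news&color=red#reviews"))
# https://www.example.com/shoes?color=red
print(canonical_tag("https://www.example.com/shoes?utm_source=news"))
# <link rel="canonical" href="https://www.example.com/shoes">
```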

#8. Learning About the Noindex Tag

The noindex tag instructs search engines not to index a particular page or piece of content.

This can be useful for pages with duplicate or low-quality content, temporary landing pages, or private content that you don't want publicly available.

Here are some tips for using it effectively:

Use the noindex tag sparingly: Only use it on pages or content that you don't want to be indexed.

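Don't block a noindex page in robots.txt: Search engines have to crawl a page to see its noindex tag, so a disallow directive for the same URL can leave the page in the index. Use a robots.txt disallow only when the goal is to stop crawling, not to remove the page from search results.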
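The tag itself is a robots meta element in the page's head, for example `<meta name="robots" content="noindex">`. To audit which pages carry it, a small script can help. The Python sketch below uses the standard `html.parser` module. The class and function names are hypothetical, and it only looks at meta tags, not at the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        for directive in content.split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


def is_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Made-up page for illustration.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindex(page))  # True
print(is_noindex("<html><head><title>Home</title></head></html>"))  # False
```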

#9. Ensuring Mobile-Friendliness

With more and more people using mobile devices to browse the internet, it's essential to have a mobile-friendly website for both user experience and search engine optimization.

Here are some tips for ensuring your content is well-optimized for any kind of device:

Use responsive design: This means that your website's layout will adjust to fit different screen sizes, providing a better user experience.

Optimize images for mobile: Make sure images are scaled appropriately and compressed for faster loading on mobile devices.

Avoid using Flash or pop-ups: These can be difficult to navigate on mobile devices and can negatively impact user experience.

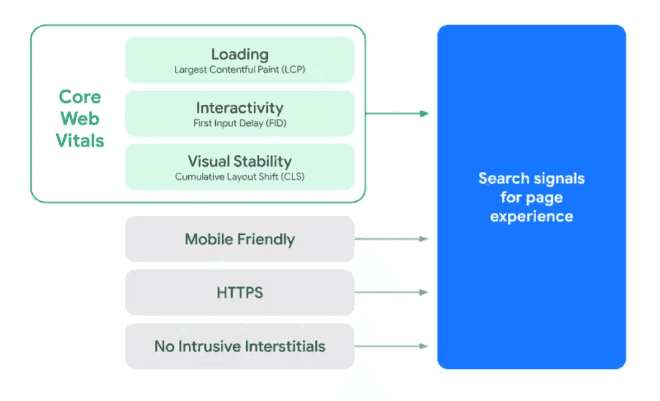

#10. Optimizing for Core Web Vitals

Core Web Vitals are a set of metrics that measure the overall user experience on a website, including load time, interactivity, and visual stability.

They became a ranking factor in Google's algorithm in 2021.

Here are some tips for optimizing your website for these metrics:

Improve page load speed: Load time feeds directly into Core Web Vitals, so applying the suggestions from #4 can have a significant impact.

Ensure responsiveness and interactivity: Make sure your website is interactive and responds quickly to user input, such as clicks or scrolling.

Avoid layout shifts: Layout shifts occur when elements on the page move unexpectedly. To prevent them, give images, ads, and embeds explicit dimensions, and animate with CSS transforms rather than with properties that change an element's size or position in the layout.
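To judge whether measurements are good enough, Google publishes thresholds for each metric. The Python sketch below rates a set of values against them. The thresholds are the commonly documented ones for Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay as the interactivity metric in March 2024), and Cumulative Layout Shift (CLS). They are not stated in this article, so check the current documentation before relying on them. The sample measurements are invented.

```python
# (good up to, needs improvement up to); anything above the second value is poor.
# LCP in seconds, INP in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate(metric, value):
    """Classify a Core Web Vitals measurement."""
    good_limit, needs_improvement_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= needs_improvement_limit:
        return "needs improvement"
    return "poor"


# Invented measurements for illustration.
measurements = {"LCP": 2.1, "INP": 620, "CLS": 0.18}
for metric, value in measurements.items():
    print(metric, value, "->", rate(metric, value))
```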

Conclusion

Technical SEO may seem daunting, but it's crucial for achieving a good search engine ranking and growing your business.

Hopefully, by following these tips, you can improve your website's technical aspects and provide a better user experience, ultimately leading to more traffic and conversions.

Keep in mind that technical SEO is an ongoing process, and it's essential to regularly monitor and update your website to stay ahead of the constantly evolving search engine algorithms.

Article by:

Erik Emanuelli

Blogger

Erik Emanuelli has been in the online marketing game since 2010. He’s now sharing what he has learned on his website. Find more insights about SEO and blogging here.